
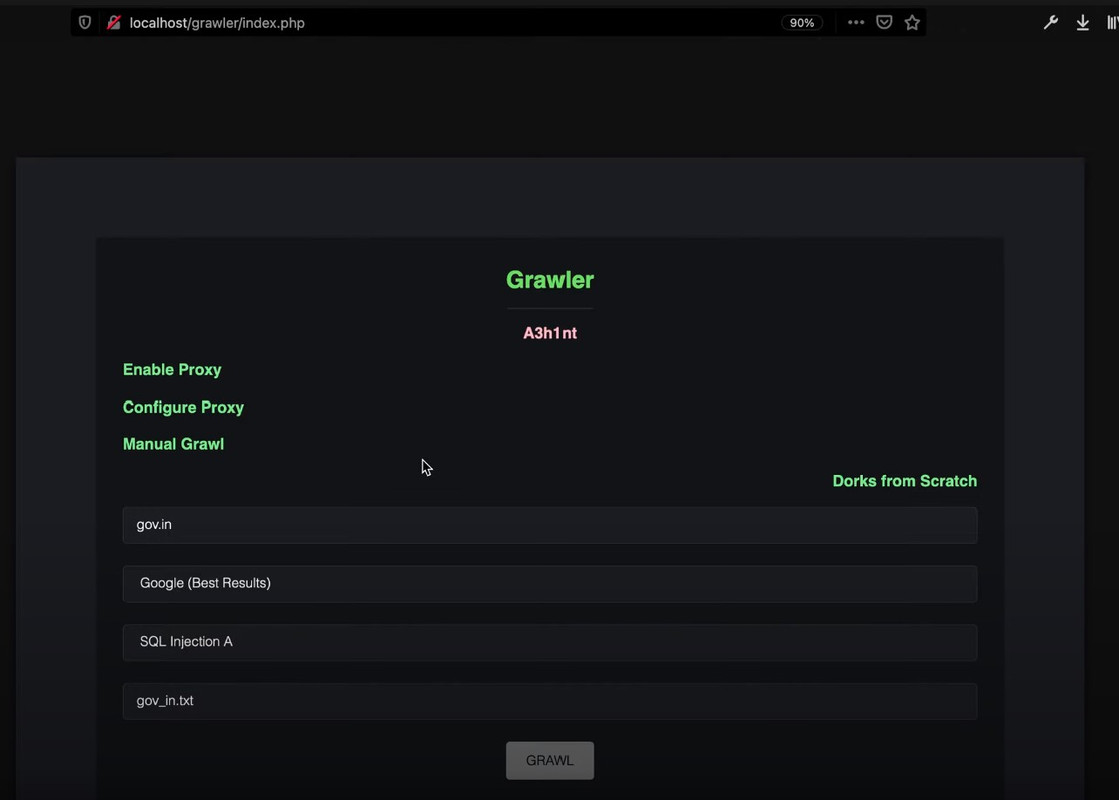

Grawler is a tool written in PHP for automating Google dorks. It comes with a web interface that automates the task of running Google dorks, scrapes the results, and stores them in a file. Version 1.0 is more powerful than ever, with support for multiple proxies (see Features).

Grawler aims to automate the use of Google dorks through a web interface. The main idea is to provide a simple yet powerful tool that anyone can use; what sets Grawler apart in its category is its features.

Features

The biggest issue faced by tools that automate Google dorks is CAPTCHA. With Grawler, CAPTCHA is no longer an issue: Grawler comes with a proxy feature that supports three different proxy services.

Supported Proxies (each of these services requires you to sign up for an API key; no credit card information is needed, and each gives you around one thousand free API calls):

ScraperAPI

Scrapingdog

Zenscrape

Grawler now supports two different modes.

Automatic Mode: Automatic mode now comes with many different dork files and supports multiple proxies for a smooth experience.

Manual Mode: Manual mode is more powerful with the new Depth feature, which lets you select the number of pages to scrape results from. The proxy feature is also supported in manual mode.

Dorks are now categorized in the following categories:

Error Messages

Extension

Java

JavaScript

Login Panels

.Net

PHP

SQL Injection (7 different files with different dorks)

A My_dorks file where users can add their own dorks.

API keys for proxies are validated before they are added to the file.

Manual mode allows users to go up to depth 4, but I’d recommend using depth 2 or 3 because the best results are usually on the initial pages.

Grawler comes with its own guide for learning Google dorks.

The results are stored in a file (the filename must be specified with a .txt extension).

URL scraping is better than ever, with no garbage URLs at all.

Grawler supports three different search engines (Bing, Google, and Yahoo), so if one blocks you, another one is available.
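To illustrate the .txt rule, here is a minimal sketch in Python (Grawler itself is PHP, and this is not its code). The helper name `save_results` and the one-URL-per-line format are assumptions made for illustration.

```python
from pathlib import Path


def save_results(urls, filename):
    """Hypothetical helper: write scraped URLs to a .txt file, one per line."""
    path = Path(filename)
    # Enforce the rule that the output filename must carry a .txt extension.
    if path.suffix.lower() != ".txt":
        raise ValueError(f"filename must end in .txt, got {filename!r}")
    # Each URL gets its own line, each terminated by a newline.
    path.write_text("".join(url + "\n" for url in urls), encoding="utf-8")
    return path
```

Rejecting the filename up front, before anything is written, means a wrong extension never leaves a half-written file behind.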
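The post does not say how garbage URLs are filtered out. The following Python sketch shows one plausible approach, under stated assumptions: it keeps only absolute http/https links, drops links that point back to the search engines themselves, strips `#fragment` parts, and removes duplicates. The function name `clean_urls` and the domain list are hypothetical, not Grawler's actual filter.

```python
from urllib.parse import urlparse

# Assumption: links back to the search engines' own pages count as "garbage".
# Country-specific domains (e.g. google.co.uk) are not covered in this sketch.
SEARCH_ENGINE_DOMAINS = ("google.com", "bing.com", "yahoo.com")


def _is_search_engine(host):
    # Match the domain itself or any subdomain of it (www.google.com, etc.).
    return any(host == d or host.endswith("." + d) for d in SEARCH_ENGINE_DOMAINS)


def clean_urls(raw_urls):
    """Hypothetical filter: keep unique absolute http(s) result URLs, in order."""
    seen = set()
    cleaned = []
    for url in raw_urls:
        parsed = urlparse(url.strip())
        host = parsed.hostname or ""  # hostname is already lowercased
        # Skip relative links, javascript: links, and other non-web schemes.
        if parsed.scheme not in ("http", "https") or not host:
            continue
        if _is_search_engine(host):
            continue
        # Drop the #fragment so page#top and page count as the same URL.
        normalized = parsed._replace(fragment="").geturl()
        if normalized not in seen:
            seen.add(normalized)
            cleaned.append(normalized)
    return cleaned
```

The function preserves the order in which the URLs were first seen, so the earliest (usually most relevant) results stay at the top of the output file.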

Multiple proxies with multiple search engines deliver the best experience ever.
