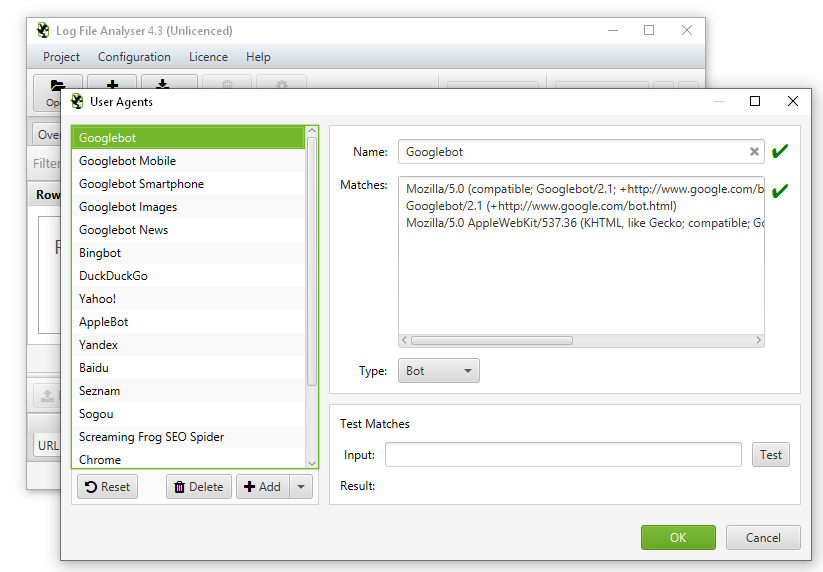

Log File Analyser

The Screaming Frog SEO Log File Analyser allows you to upload your log files, verify search engine bots, identify crawled URLs and analyse search bot data and behaviour for invaluable SEO insight. Download for free, or purchase a licence to upload more log events and create additional projects.
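The post doesn't say how the tool verifies bots. One widely used approach, which Google documents for Googlebot, is a reverse DNS lookup on the requesting IP, a check that the hostname ends in an expected domain, and then a forward lookup to confirm that the hostname resolves back to the same IP. Below is a minimal Python sketch of that idea, not the Log File Analyser's implementation. The suffix list and the function names are assumptions, and the resolvers can be swapped out so the check can be tested offline:

```python
import socket
from typing import Callable, Iterable

# Hostname suffixes assumed for Googlebot; confirm against Google's current documentation.
GOOGLEBOT_SUFFIXES = (".googlebot.com", ".google.com")


def _reverse(ip: str) -> str:
    # PTR lookup: returns the primary hostname for the IP.
    return socket.gethostbyaddr(ip)[0]


def _forward(host: str) -> list[str]:
    # A-record lookup (IPv4 only): returns all addresses for the hostname.
    return socket.gethostbyname_ex(host)[2]


def verify_bot_ip(
    ip: str,
    suffixes: Iterable[str] = GOOGLEBOT_SUFFIXES,
    reverse: Callable[[str], str] = _reverse,
    forward: Callable[[str], list[str]] = _forward,
) -> bool:
    """Return True if `ip` reverse-resolves to an allowed hostname that
    forward-resolves back to the same IP."""
    try:
        host = reverse(ip).rstrip(".")
    except OSError:  # socket.herror / socket.gaierror: no usable PTR record
        return False
    if not host.endswith(tuple(suffixes)):
        return False
    try:
        return ip in forward(host)
    except OSError:
        return False
```

The forward check is what matters here. Anyone can set a PTR record for their own IP that claims to be `googlebot.com`, but they cannot make Google's DNS resolve that name back to their address.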

What can you do with the SEO Log File Analyser?

The Log File Analyser is lightweight but extremely powerful: it can process, store and analyse millions of lines of log file event data in a smart database. It gathers key log file data so that SEOs can make informed decisions. Common uses include:

Identify Crawled URLs

View and analyse exactly which URLs Googlebot & other search bots have crawled, when, and how frequently.
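As a rough illustration of what "identifying crawled URLs" involves, the sketch below parses lines in the Apache/NGINX "combined" access-log format and counts requests per URL from a given user-agent. The regex, the function name and the `Googlebot` token are assumptions for this example. Other log formats need a different pattern, and matching on user-agent alone does not verify the bot:

```python
import re
from collections import Counter

# Apache/NGINX "combined" log format; other formats need a different pattern.
LOG_RE = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<url>\S+) [^"]*" (?P<status>\d{3}) (?P<bytes>\d+|-) '
    r'"(?P<referer>[^"]*)" "(?P<agent>[^"]*)"'
)


def crawled_urls(lines, bot_token="Googlebot"):
    """Count requests per URL whose user-agent contains `bot_token`.
    User-agent strings can be spoofed, so this does not verify the bot."""
    counts = Counter()
    for line in lines:
        m = LOG_RE.match(line)
        if m and bot_token in m["agent"]:
            counts[m["url"]] += 1
    return counts
```

Passing an open log file works because a file object iterates line by line. `counts.most_common(10)` then lists the ten most requested URLs.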

Discover Crawl Frequency

Get insight into which search bots crawl most frequently, how many URLs are crawled each day, and the total number of bot events.
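The three crawl-frequency figures mentioned (events per bot, URLs crawled per day, total events) can be derived from parsed log records. Below is a minimal sketch that assumes each record has already been reduced to a `(timestamp, user_agent, url)` tuple. The bot-token mapping and function name are hypothetical:

```python
from collections import defaultdict
from datetime import datetime

# Hypothetical user-agent substrings mapped to bot labels; extend as needed.
BOT_TOKENS = {"Googlebot": "Googlebot", "bingbot": "Bingbot"}


def daily_bot_stats(records):
    """records: iterable of (timestamp, user_agent, url) tuples, where timestamp
    uses the access-log style '10/Oct/2023:13:55:36 +0000'.
    Returns {bot: {date: (events, unique_urls)}}."""
    events = defaultdict(lambda: defaultdict(int))
    urls = defaultdict(lambda: defaultdict(set))
    for ts, agent, url in records:
        bot = next((label for token, label in BOT_TOKENS.items() if token in agent), None)
        if bot is None:
            continue  # not a bot we track
        # Calendar day in the log's own UTC offset.
        day = datetime.strptime(ts, "%d/%b/%Y:%H:%M:%S %z").date()
        events[bot][day] += 1
        urls[bot][day].add(url)
    return {
        bot: {day: (n, len(urls[bot][day])) for day, n in days.items()}
        for bot, days in events.items()
    }
```

The total number of events for a bot is the sum of the first element of each tuple in that bot's entry.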

Find Broken Links & Errors

Discover all response codes, broken links and errors that search engine bots have encountered while crawling your site.
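Finding the errors bots hit comes down to tallying status codes from bot requests. Here is a small sketch, assuming the log lines have already been reduced to `(url, status)` pairs. The function name is hypothetical:

```python
from collections import Counter


def status_summary(hits):
    """hits: iterable of (url, status_code) pairs from bot requests.
    Returns (count per status code, count per (url, status) for 4xx/5xx)."""
    codes = Counter()
    errors = Counter()
    for url, status in hits:
        codes[status] += 1
        if status >= 400:  # client (4xx) and server (5xx) errors
            errors[(url, status)] += 1
    return codes, errors
```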

Audit Redirects

Find temporary and permanent redirects encountered by search bots, which might differ from those seen in a browser or simulated crawl.
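To compare what bots received with what a crawler saw, the sketch below classifies logged redirects as permanent or temporary and flags URLs where the crawl returned a different status. The code sets follow RFC 9110 (303 See Other is left out). The input shapes and function name are assumptions:

```python
# Redirect status codes per RFC 9110; 303 See Other is deliberately not classified.
PERMANENT = {301, 308}
TEMPORARY = {302, 307}


def audit_redirects(bot_hits, crawl_status):
    """bot_hits: (url, status) pairs from the logs; crawl_status: {url: status}
    from a separate crawl (hypothetical input). The last logged redirect per URL wins."""
    report = {}
    for url, status in bot_hits:
        if status in PERMANENT:
            kind = "permanent"
        elif status in TEMPORARY:
            kind = "temporary"
        else:
            continue  # not a redirect
        crawled = crawl_status.get(url)
        report[url] = {"bot_status": status, "kind": kind,
                       "crawl_status": crawled, "differs": crawled != status}
    return report
```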

Improve Crawl Budget

Analyse the site's most and least crawled URLs & directories to identify waste and improve crawl efficiency.
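A directory-level view can be built by grouping bot hits on their leading path segments. The sketch below treats the final path component as a page rather than a directory. The function name and `depth` parameter are illustrative assumptions:

```python
from collections import Counter
from urllib.parse import urlsplit


def crawls_by_directory(urls, depth=1):
    """Count bot hits per directory prefix (first `depth` path segments).
    The final path component is treated as a file, not a directory."""
    counts = Counter()
    for url in urls:
        # Segments between the leading "/" and the last component, empties dropped.
        parts = [p for p in urlsplit(url).path.split("/")[1:-1] if p]
        key = "/" + "/".join(parts[:depth]) + "/" if parts else "/"
        counts[key] += 1
    return counts
```

`counts.most_common()` lists directories from most to least crawled. Reading that list from the end surfaces the rarely crawled sections.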

Identify Large & Slow Pages

Review the average bytes downloaded & time taken to identify large pages or performance issues.
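Averaging size and response time per URL is straightforward once those fields are available. Note that the default combined log format records bytes sent but not response time, so this sketch assumes a log format that includes a timing field. The records are assumed to be `(url, bytes_sent, response_ms)` tuples, and the function name is hypothetical:

```python
from collections import defaultdict


def average_size_and_time(records):
    """records: iterable of (url, bytes_sent, response_ms) tuples.
    Returns {url: (average_bytes, average_ms)}."""
    totals = defaultdict(lambda: [0, 0, 0])  # url -> [count, total_bytes, total_ms]
    for url, size, ms in records:
        t = totals[url]
        t[0] += 1
        t[1] += size
        t[2] += ms
    return {url: (b / n, ms / n) for url, (n, b, ms) in totals.items()}
```

Sorting the result by either element of the tuple surfaces the largest or slowest URLs.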

Find Uncrawled & Orphan Pages

Import a list of URLs and match it against log file data to identify orphan or unknown pages, or URLs which Googlebot hasn't crawled.
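At its core, matching an imported URL list against log data is a set comparison. The sketch below assumes both inputs are already normalised to the same form (for example, all paths or all absolute URLs). The function name and result keys are hypothetical:

```python
def compare_url_sets(imported_urls, logged_urls):
    """Compare a known URL list (e.g. from a crawl or sitemap) with URLs seen in logs."""
    imported, logged = set(imported_urls), set(logged_urls)
    return {
        "not_crawled": imported - logged,  # known URLs that bots never requested
        "log_only": logged - imported,     # requested by bots but absent from the list: possible orphans
        "matched": imported & logged,
    }
```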

Combine & Compare Any Data

Import any data with a 'URLs' column and match it against log file data. For example, import crawls, directives, or external link data for advanced analysis.
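Matching external data on a URL column is essentially a left join. The sketch below attaches per-URL bot hit counts to each row of an imported CSV, using the 'URLs' column name mentioned above as the default. The function name and the `Indexability` column in the usage test are hypothetical:

```python
import csv
import io


def join_on_urls(csv_text, log_counts, column="URLs"):
    """Left-join rows of an imported CSV onto per-URL bot hit counts.
    Rows whose URL never appears in the logs get bot_hits = 0."""
    reader = csv.DictReader(io.StringIO(csv_text))
    joined = []
    for row in reader:
        url = row[column]
        joined.append({**row, "bot_hits": log_counts.get(url, 0)})
    return joined
```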

https://www.screamingfrog.co.uk/log-file-analyser/